Understanding Agents: Improving prompts to enhance LLM planning and function calling

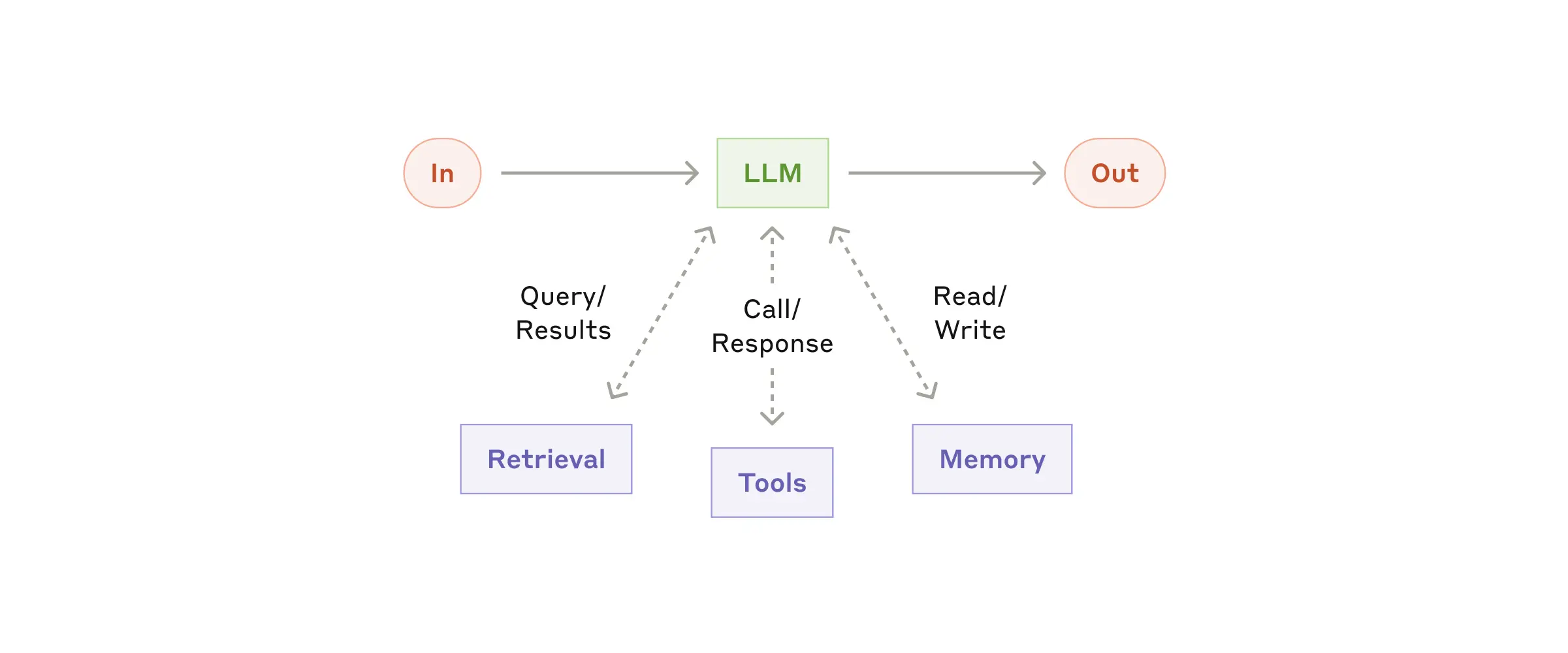

Agents are designed to automate tasks with greater autonomy and adaptability than traditional process automation workflows. Leveraging Large Language Models (LLMs), agents are better equipped to handle dynamic and complex scenarios. This guide introduces the concept of agents, distinguishes them from other techniques, and explores practical prompt engineering strategies to enhance the planning phase of agent-driven workflows.

Agents are digital entities capable of acting independently and interacting within the digital world. While the exact origin of the term "agent" is unclear, it likely stems from Reinforcement Learning vocabulary. The true game-changer for agents lies in their ability to work autonomously, enabling the automation of long-tail tasks that are typically outside the scope of larger, monolithic projects.

Agents vs Workflows

Anthropic offers a useful distinction between Agents and "Agent-like" workflows, as outlined in their research. Agent-like workflows are scenarios where LLMs and tools are combined within predefined pipelines (fixed code paths), while Agents operate autonomously, with the LLM dynamically directing its own process and tool usage.

Agents are increasingly versatile, excelling in two distinct scenarios: they can handle highly specific, singular tasks that previously required custom "throwaway scripts," and they can tackle complex projects that once demanded extensive development efforts. This flexibility in automation is reshaping how we approach productivity enhancement. Automating business processes isn't new (Robotic Process Automation, or RPA, has been around for years), but the breakthroughs in agent technology can be attributed to three key advancements in recent years:

- Natural Language Interaction: Large Language Models (LLMs) enable seamless communication with agents in natural language, removing the need for domain-specific programming expertise.

- Tool Integration: Agents now easily integrate with tools and other agents, leveraging LLMs to simplify what once required glue code, APIs, and complex ETL pipelines. Even in more challenging scenarios, fine-tuning the LLM or making minor adjustments is often enough to enable smooth collaboration.

- Adaptability: Unlike traditional rule-based RPAs, modern agents can plan and handle slight variations in tasks, allowing for greater flexibility and robustness.

Trade-Offs and Hype

In many cases, a full-fledged multi-agent system may not be the most practical or efficient choice compared to a well-defined rule-based solution or a single optimized LLM call. As with any machine learning application, it's essential to evaluate multiple metrics—such as cost, latency, and performance—before committing to such an approach. Furthermore, what is often marketed as a sophisticated agentic system is, in reality, a combination of LLMs running in a loop with environmental feedback and access to external tools.
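To make the "LLMs running in a loop" description concrete, here is a minimal sketch. It assumes a chat-style model that returns either a tool call or a final answer; `fake_llm`, the `add` tool, and the message format are hypothetical stand-ins so the example runs without any API, not the interface of a specific framework.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> plain Python function taking a string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split(","))),
}


def fake_llm(history: list[dict]) -> dict:
    """Stand-in for a real LLM call (assumption). Returns a tool call or a final answer."""
    last = history[-1]
    if last["role"] == "user":
        # First turn: decide to use a tool.
        return {"type": "tool_call", "tool": "add", "args": "2,3"}
    # The last message is a tool result: turn it into a final answer.
    return {"type": "final", "content": f"The sum is {last['content']}."}


def run_agent(task: str, llm: Callable[[list[dict]], dict] = fake_llm, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = llm(history)
        if action["type"] == "final":
            return action["content"]
        # Run the requested tool and feed its output back: the environmental feedback.
        result = TOOLS[action["tool"]](action["args"])
        history.append({"role": "tool", "content": result})
    # Guard against endless loops.
    return "Stopped: step limit reached."


print(run_agent("What is 2 + 3?"))  # → The sum is 5.
```

The `max_steps` cap is a simple way to bound the cost and latency of such a loop, which is exactly where the trade-offs above show up in practice.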

Optimizing Prompts for Efficient Planning

What sets an agent workflow apart from patterns commonly implemented in software engineering (e.g., orchestrators, DAGs, pipelines, queues) is that an LLM does the planning: it decides which steps to take and how to use the available tools, adapting both as the task unfolds. This difference matters even more for complex or never-before-seen tasks. Effective planning is therefore critical for maximizing the efficiency of agents. Below, we explore several strategies and techniques designed to improve prompt engineering for planning tasks.

Chain of Thought (CoT)

Chain of Thought (CoT) is one of the first and most impactful prompt engineering techniques. Its essence is to break complex problems into smaller, sequential steps. By guiding the LLM to focus on one step at a time, CoT produces more structured output. While it doesn't inherently integrate external tools or world knowledge, it served as a precursor to more advanced techniques for LLMs.
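A CoT prompt can be as simple as a worked exemplar whose answer spells out intermediate steps, or, in the zero-shot variant, a trigger phrase such as "Let's think step by step." The sketch below only builds prompt strings; the exemplar text and the `build_cot_prompt` helper are illustrative assumptions.

```python
# Illustrative exemplar: the answer spells out intermediate steps before the result.
COT_EXEMPLAR = (
    "Q: A cafe had 23 apples. It used 20 for lunch and bought 6 more. "
    "How many apples does it have now?\n"
    "A: The cafe started with 23 apples. After using 20, it had 23 - 20 = 3. "
    "After buying 6 more, it had 3 + 6 = 9. The answer is 9.\n"
)


def build_cot_prompt(question: str, few_shot: bool = True) -> str:
    if few_shot:
        # Few-shot CoT: the model imitates the step-by-step exemplar.
        return f"{COT_EXEMPLAR}\nQ: {question}\nA:"
    # Zero-shot CoT: a trigger phrase asks the model to reason step by step.
    return f"Q: {question}\nA: Let's think step by step."


print(build_cot_prompt("A train travels 60 km per hour for 2.5 hours. How far does it go?"))
```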

Tree of Thoughts (ToT)

Building upon CoT, ToT introduces branching: multiple candidate reasoning paths ("thoughts") are explored simultaneously and evaluated along the way, overcoming the constraints of token-level, left-to-right sequential generation. It encourages exploration of alternative solutions. For those familiar with traditional machine learning, it resembles ensembling techniques.
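The branch-and-evaluate idea can be sketched as a breadth-first search that keeps only the best few thoughts at each depth. In a real ToT system, `propose` and `evaluate` would each be LLM calls; here they are toy stand-ins (an assumption) for a small puzzle: choose three steps from {1, 3, 5} that sum to 11.

```python
from typing import Callable

State = tuple[int, ...]  # a partial solution: the steps chosen so far


def tree_of_thoughts_bfs(
    propose: Callable[[State], list[State]],
    evaluate: Callable[[State], float],
    depth: int,
    beam_width: int,
) -> State:
    frontier: list[State] = [()]  # start from an empty partial solution
    for _ in range(depth):
        # Branch: expand every kept thought into several candidate next thoughts.
        candidates = [child for state in frontier for child in propose(state)]
        # Evaluate intermediate thoughts and keep only the most promising ones.
        frontier = sorted(candidates, key=evaluate, reverse=True)[:beam_width]
    return frontier[0]


# Toy stand-ins (assumptions) for the LLM "thought generator" and "state evaluator".
TARGET = 11


def propose(state: State) -> list[State]:
    return [state + (step,) for step in (1, 3, 5)]


def evaluate(state: State) -> float:
    return -abs(TARGET - sum(state))  # closer to the target scores higher


best = tree_of_thoughts_bfs(propose, evaluate, depth=3, beam_width=2)
print(best, sum(best))  # → (5, 5, 1) 11
```

Pruning to a small beam is what keeps the number of (expensive) evaluation calls bounded as the tree grows.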

ToT Prompting

Tree-of-Thought Prompting is inspired by the ToT papers but condenses the idea into a simple prompting technique, encouraging the model to explore multiple paths within a single prompt.
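A single-prompt version might look like the template below, which paraphrases the popular "several experts" phrasing. The exact wording and the `build_tot_prompt` helper are assumptions, not a canonical prompt.

```python
def build_tot_prompt(question: str, n_experts: int = 3) -> str:
    """Single-prompt Tree-of-Thought template (paraphrased "several experts" style)."""
    return (
        f"Imagine {n_experts} different experts are answering this question. "
        "Each expert writes down one step of their thinking and shares it with the group. "
        "Then all experts move on to the next step, and so on. "
        "If any expert realizes they are wrong at any point, they leave. "
        "Finally, state the answer the remaining experts agree on.\n\n"
        f"Question: {question}"
    )


print(build_tot_prompt("Which is larger, 9.11 or 9.9?"))
```

The branching, pruning, and final selection all happen inside one model response, which is cheaper than a full ToT search but gives less control over how paths are evaluated.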

Directional Stimulus Prompting (DSP)

This technique leverages the model's ability to detect subtle cues in the input prompt, using those cues to nudge its responses in a particular direction. In the original paper, a small tunable policy LM is trained to generate the stimulus (a hint, such as keywords), which acts as a guide that steers the frozen LLM's responses in the desired direction. This method allows for increased control and adaptability without modifying the underlying LLM.
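The flow can be sketched in two stages: a policy model produces a hint, and the hint is inserted into the prompt sent to the frozen LLM. In the paper the policy is a trained model; here `policy_hint` is a hypothetical frequency-based stand-in, and the prompt wording is an assumption, used only to show where the stimulus plugs in.

```python
import re
from collections import Counter

STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "on", "for", "with"}


def policy_hint(text: str, k: int = 3) -> list[str]:
    """Hypothetical stand-in for the tunable policy LM: the k most frequent
    non-stopword tokens. In DSP this component is a trained model."""
    tokens = [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]
    return [word for word, _ in Counter(tokens).most_common(k)]


def build_dsp_prompt(article: str, hint: list[str]) -> str:
    # The frozen LLM receives the original input plus the generated stimulus.
    return (
        f"Article: {article}\n"
        f"Hint (keywords): {'; '.join(hint)}\n"
        "Summarize the article in one sentence, based on the hint."
    )


article = "The agent calls a search tool. The agent then reads the tool output and plans."
print(build_dsp_prompt(article, policy_hint(article)))
```

Only the hint generator would be tuned; the downstream LLM and its weights stay untouched.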

Self-Consistency

Self-Consistency involves sampling multiple reasoning paths for the same problem and selecting the most consistent answer. This technique increases reliability by minimizing the impact of randomness in LLM outputs, especially for complex, ambiguous tasks. It is similar to Tree of Thoughts, but Self-Consistency relies on the frequency (consistency) of final answers, whereas ToT relies on evaluating intermediate reasoning steps.
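The selection step reduces to a majority vote over final answers. The sketch below assumes each sampled completion ends with "The answer is X." (a prompt convention, not a requirement of the method); the hard-coded samples stand in for completions drawn from an LLM at a temperature above zero.

```python
import re
from collections import Counter
from typing import Optional


def extract_answer(completion: str) -> Optional[str]:
    # Assumes each sampled reasoning path ends with "The answer is X."
    match = re.search(r"The answer is\s+(-?\d+)", completion)
    return match.group(1) if match else None


def self_consistency(completions: list[str]) -> Optional[str]:
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    # Majority vote over the final answers of independently sampled reasoning paths.
    return Counter(answers).most_common(1)[0][0]


# In practice these would be sampled from the LLM with temperature > 0.
samples = [
    "23 - 20 = 3, then 3 + 6 = 9. The answer is 9.",
    "The cafe used 20 and bought 6, so 20 + 6 = 26. The answer is 26.",
    "After lunch 3 apples remain; adding 6 gives 9. The answer is 9.",
]
print(self_consistency(samples))  # → 9
```

Note that the vote ignores the reasoning text itself; only the final answers are compared, which is the contrast with ToT drawn above.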

Few-Shot Prompting

Few-shot prompting allows the model to "learn" on the fly from a few examples included in the prompt, picking up the specific answer pattern the user wants. No weights are updated: this works because the model leverages the patterns encoded during its pretraining phase to generalize from the examples in the prompt.
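In practice, a few-shot prompt is just the examples rendered in the same format as the query, with the answer slot left open. The sentiment task, the field labels, and the `build_few_shot_prompt` helper below are illustrative assumptions.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    lines = ["Classify the sentiment of each review as positive or negative.", ""]
    for text, label in examples:
        # Each example demonstrates the exact input -> output format to imitate.
        lines.append(f"Review: {text}\nSentiment: {label}\n")
    # The query repeats the format and leaves the label for the model to complete.
    lines.append(f"Review: {query}\nSentiment:")
    return "\n".join(lines)


examples = [
    ("The setup took two minutes and it just works.", "positive"),
    ("It broke after a week and support never replied.", "negative"),
]
print(build_few_shot_prompt(examples, "Great value, I would buy it again."))
```

Passing an empty `examples` list yields a zero-shot prompt in the same format, which makes it easy to compare both variants on the same task.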

Reasoning Models

For some types of LLMs (e.g., reasoning models such as DeepSeek-R1), few-shot prompting can degrade performance. In those cases, describing the problem directly, without examples in the prompt, may produce better results.

Reason + Act (ReAct) framework

ReAct combines reasoning with action, enabling agents to generate intermediate reasoning steps while interacting with tools or APIs. This approach combines CoT's "reasoning stimulus" with real-time action, making it especially effective for multi-step tasks that require external tool integration. It is now widely seen as a standard paradigm for building LLM agents that use function calling.
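The Thought / Action / Observation cycle can be sketched as follows. The `Tool[input]` and `Finish[answer]` action syntax loosely follows the style of the ReAct paper's prompts, but the parser, the `multiply` tool, and `scripted_llm` are hypothetical stand-ins so the loop runs offline.

```python
import re
from typing import Callable

# Matches lines such as "Action: Multiply[17,3]" or "Action: Finish[51]".
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def react(
    question: str,
    llm: Callable[[str], str],
    tools: dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # expected: "Thought: ...\nAction: Tool[input]"
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: could not parse an action.\n"
            continue
        name, arg = match.groups()
        if name == "Finish":
            return arg
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name}"
        # The observation is appended so the next Thought can react to it.
        transcript += f"Observation: {observation}\n"
    return "Stopped: step limit reached."


def multiply(args: str) -> str:
    a, b = (int(x) for x in args.split(","))
    return str(a * b)


def scripted_llm(transcript: str) -> str:
    """Hypothetical model stand-in: acts first, then finishes using the last observation."""
    if "Observation:" not in transcript:
        return "Thought: I should compute 17 times 3.\nAction: Multiply[17,3]"
    last_obs = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: The tool returned the product.\nAction: Finish[{last_obs}]"


print(react("What is 17 * 3?", scripted_llm, {"Multiply": multiply}))  # → 51
```

With native function calling, the regex parsing is replaced by structured tool-call outputs, but the reason, act, observe cycle stays the same.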

Other Techniques

The landscape of techniques for optimizing agents and LLMs continues to expand. For example, Dynamic Prompt Composition involves generating and refining prompts in real time based on task-specific requirements. For a broader overview of cutting-edge techniques, see the detailed survey "The Prompt Report: A Systematic Survey of Prompting Techniques".

Conclusion

Large Language Models (LLMs) are driving significant advancements in task automation through agent-based systems. These systems combine the natural language processing capabilities of LLMs with tool integration, enabling more flexible and adaptable automation compared to traditional rule-based approaches. While agents show promise for handling complex, dynamic tasks, it's important to maintain a pragmatic perspective. In many cases, simpler solutions like well-defined rule-based systems or optimized single LLM calls may be more efficient and cost-effective. As we move forward, it's crucial to carefully evaluate the practical applications of agent-based systems, considering factors such as cost, latency, and performance, to ensure their deployment aligns with specific business needs and efficiency goals.

References and Additional Resources

- Anthropic AI: Building Effective Agents

- Why Agents Are the Next Frontier of Generative AI

- Agents All the Way Down

- Huyen Chip: Agents

- What is an agent, and does your data need one?

- Google Whitepaper on Agents

- Agents Are Just Long-Running Jobs: A Pragmatic View of an Overhyped AI

- Prompting Guide

If you want to share any piece of this write-up, please cite it as:

@article{victorbrx,
  title = {Understanding Agents: Improving prompts to enhance LLM planning and function calling},
  author = {Regueira, Victor B.},
  journal = {randomnoise.dev},
  year = {2025},
  month = {Jan},
  url = {https://randomnoise.dev/blog/2025/01/25/understanding-agents-improving-prompts-to-enhance-llm-planning-and-function-calling/}
}
